Rajan Agarwal

I worked on RL for web agents as a Research Engineer at Amazon AGI Lab. Previously, I built multimodal video editing agents for Hollywood at Kino AI and low-level train safety systems at Hitachi Rail.

I am a deeply technical person. I'm constantly building, learning and breaking things. I'm obsessed with learning how things work and designing novel solutions to problems I can't get out of my head. Right now, I'm most curious about multimodal models and coding agents.

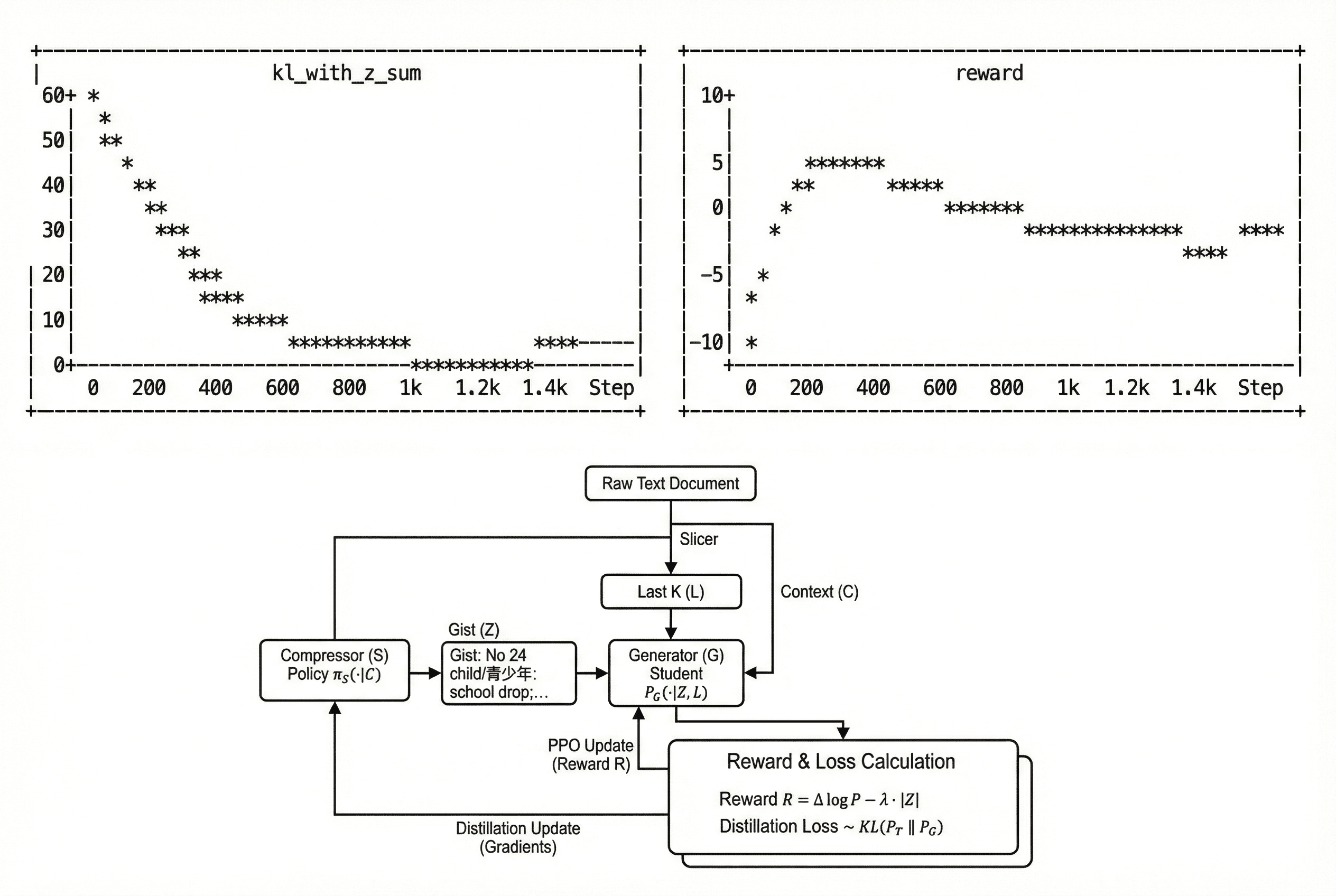

LLMs can invent their own compression

2025: LLMs can use RL, framed as a constrained optimization problem, to invent their own compression schemes and extend their context window.

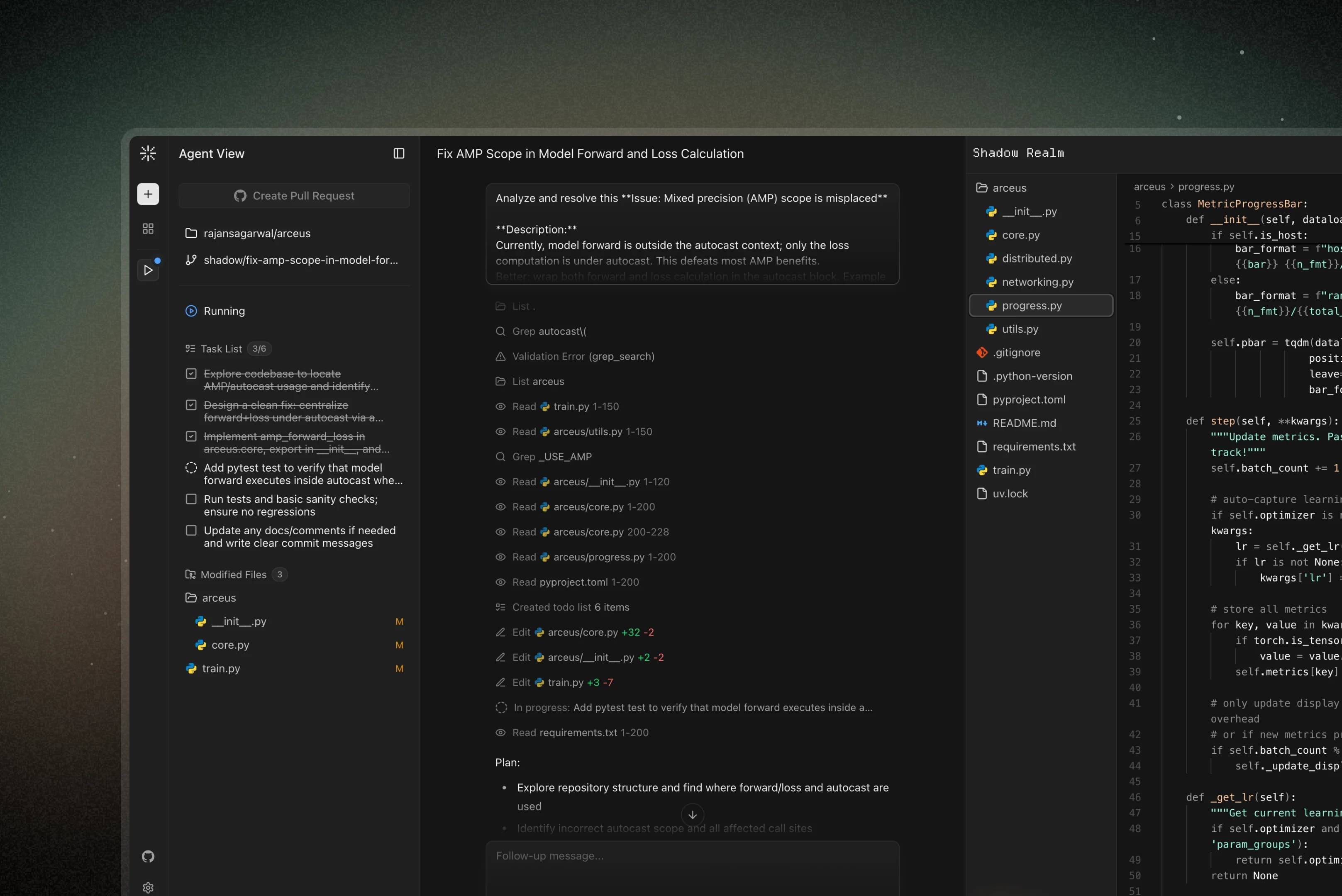

Shadow

2025: Open-source background coding agent with 1.4k stars on GitHub. A full-featured agent that runs in a MicroVM with full codebase understanding.

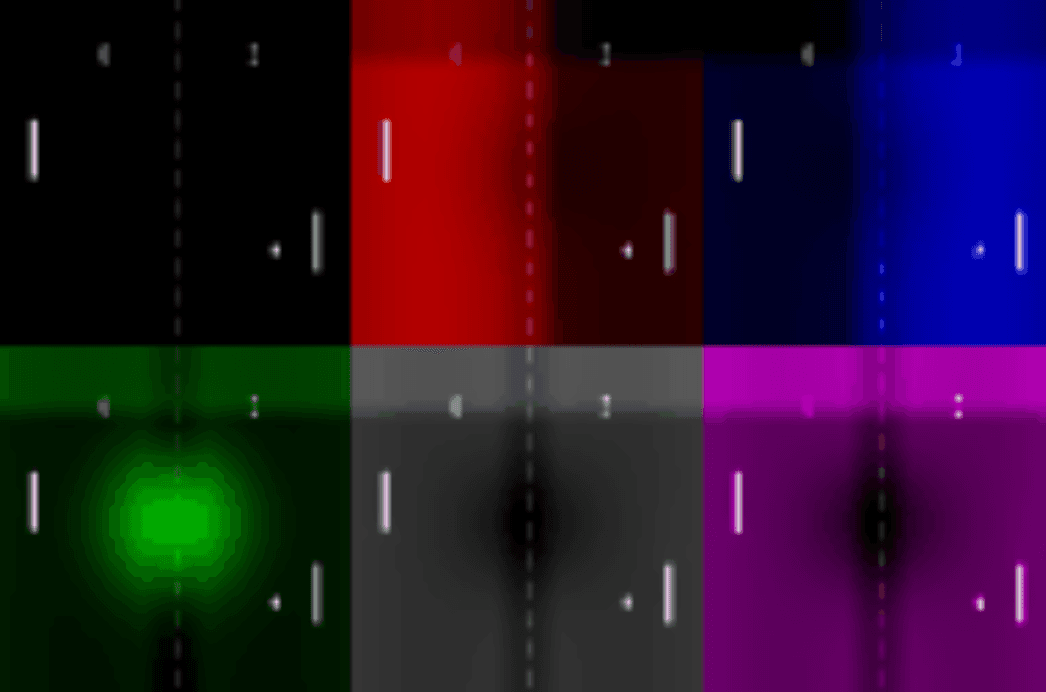

How not to do research

2026: Lessons learned from building multiplayer world models. Built a video tokenizer with spatial attention and a dynamics model with action spaces.

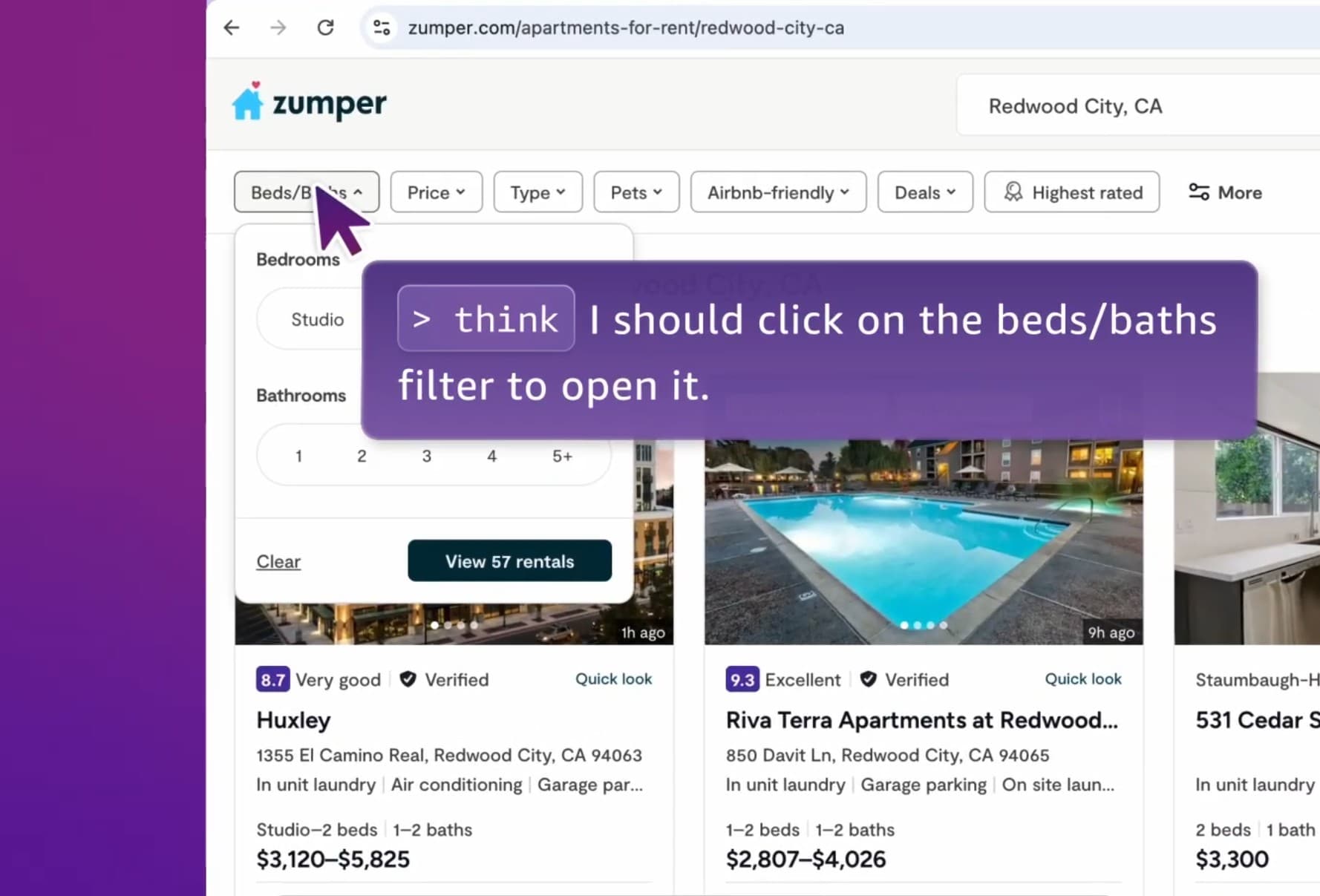

Nova Act: SOTA browser-use model

2025: During my internship at Amazon AGI, I worked on RL for a browser-use model. I led model performance on two benchmarks and worked on algorithms and performance.

Natural Deception with RL

2025: Language models trained on hidden-information games naturally learn deceptive techniques to win by any means.

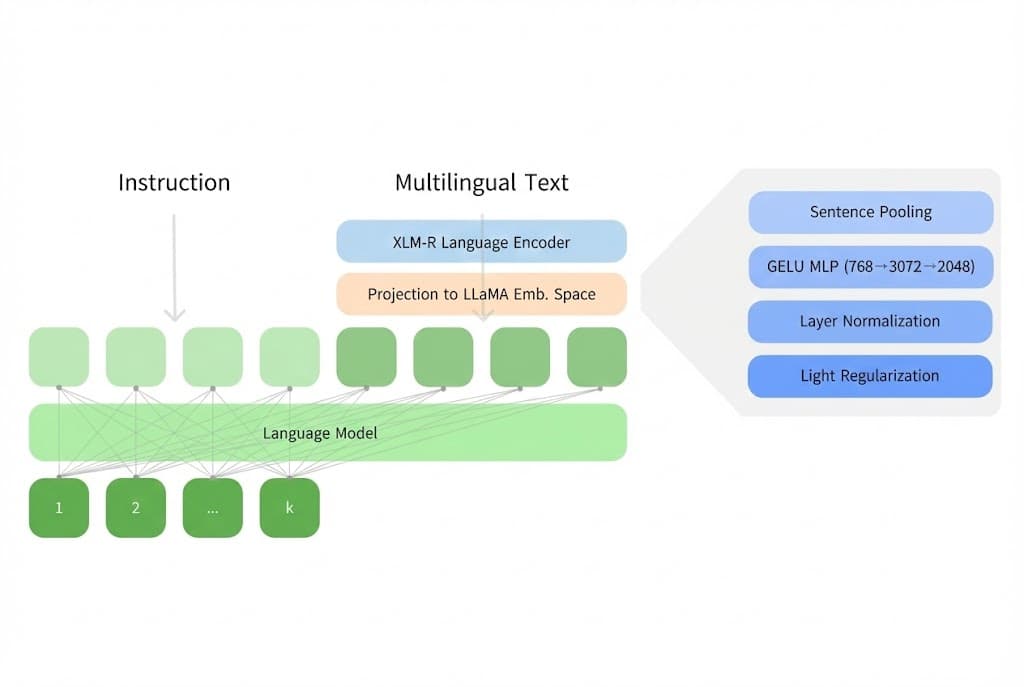

Cross Lingual Alignment

2025: Research under Cohere Labs on compute-efficient post-training that represents different languages as modalities for multilingual language models.

Kino AI: Hollywood Video Editing Agent

2025: Multimodal agent and long-context video understanding to help Hollywood editors. Worked on the infra, video retrieval, and the agent.

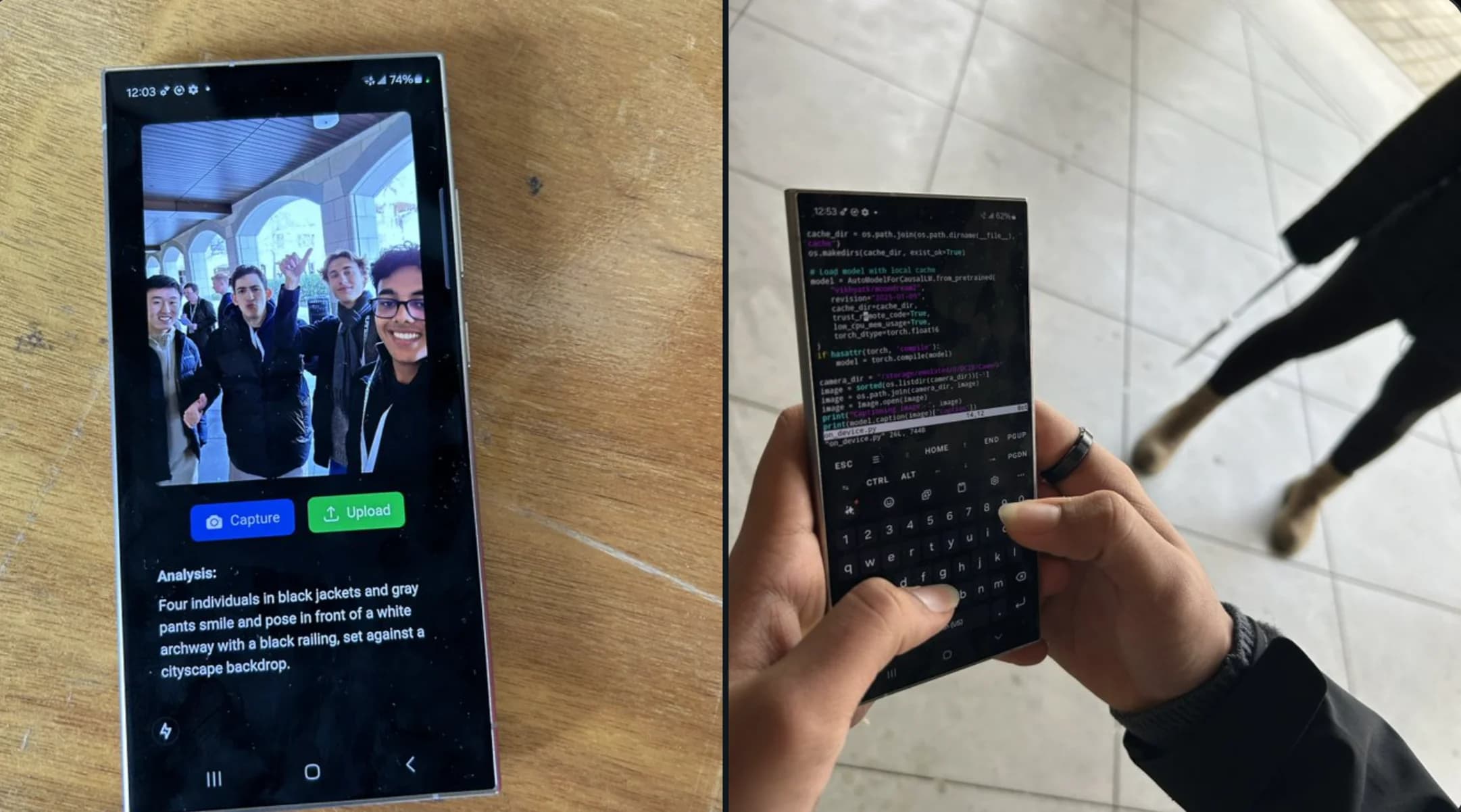

Local VLM on a Samsung Galaxy

2025: Tricked a Galaxy S24 into running the Moondream 3B VLM locally, using quantization and a local Linux setup on the phone. Built at TreeHacks 2025.

Arceus: Distributed Training on MacBooks

2024: A decentralized cross-device model training system that uses model and tensor parallelism to reduce the compute needed to train large models.

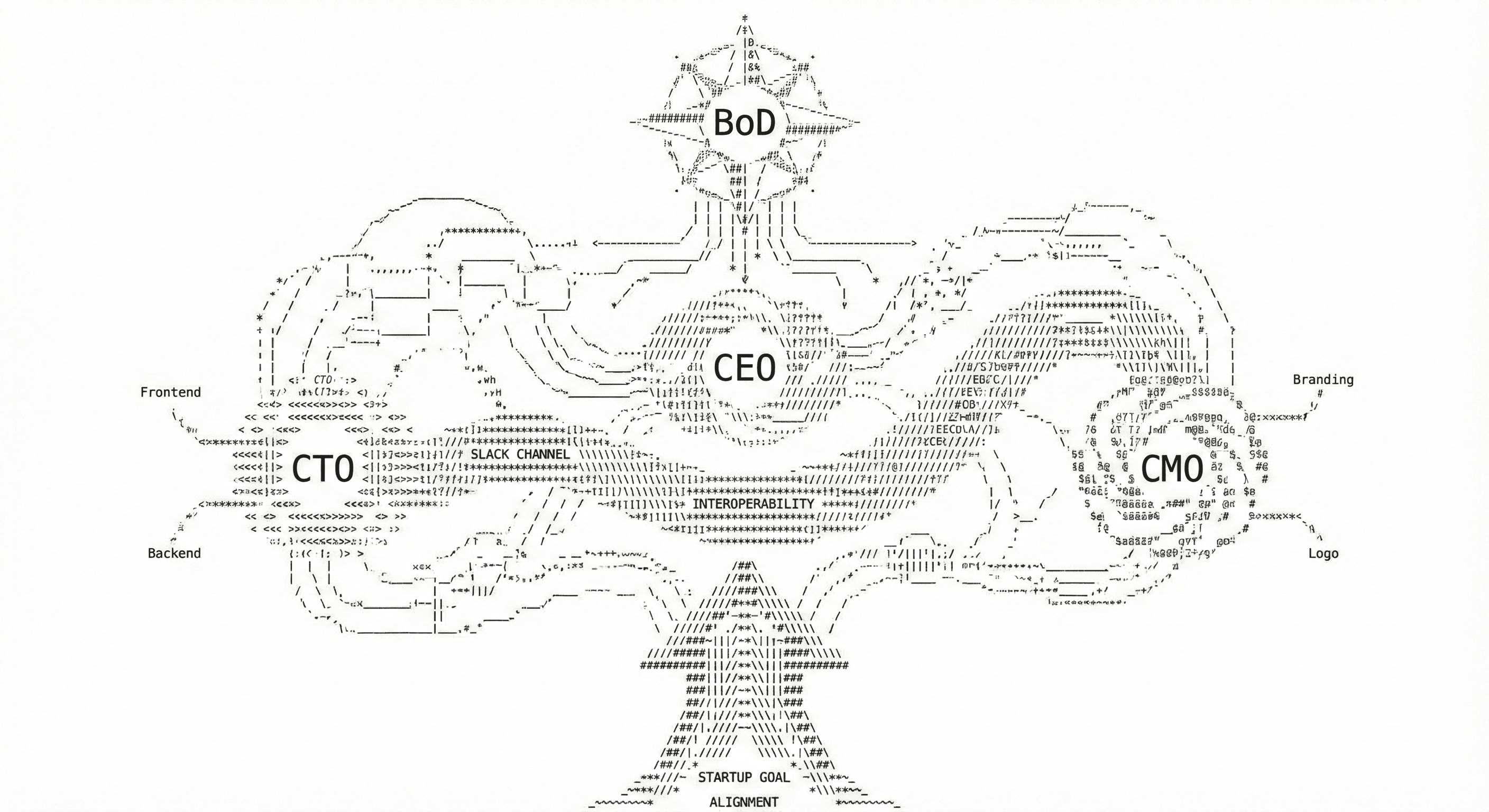

Interoperable Coding Subagents

2024: One of the first implementations of coding subagents that work together to solve hard, diverse coding problems.

Multimodal Memory Architecture

2023: Long-term memory with multimodal knowledge graphs to search 7 days of video and audio within 5 seconds. Winners @ Hack the North 2023.

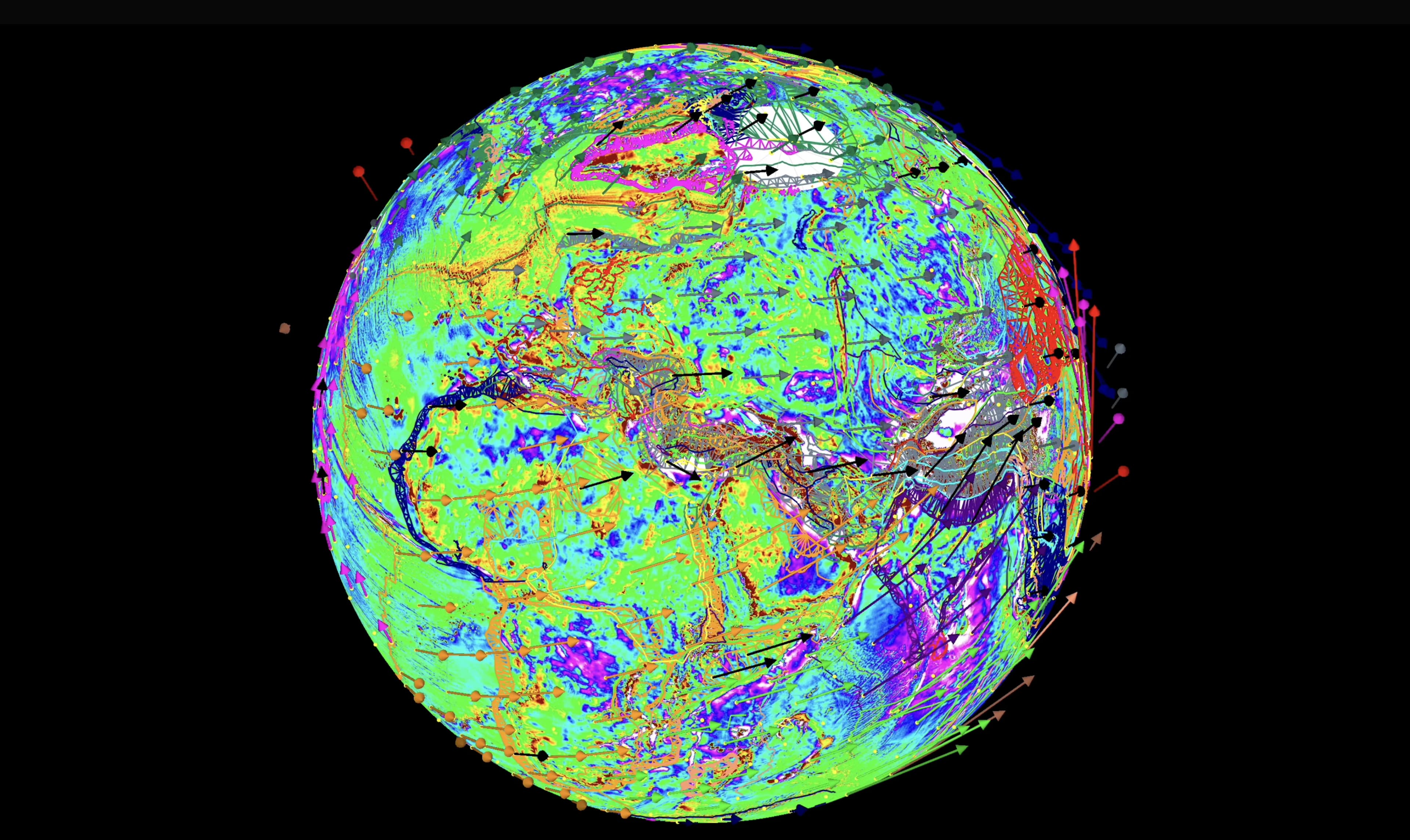

Shapeshift

2023: Deep learning analysis of seismic frequencies and local policy to design affordable earthquake-resistant buildings. Worked under RippleX Fellowship, RBCx.

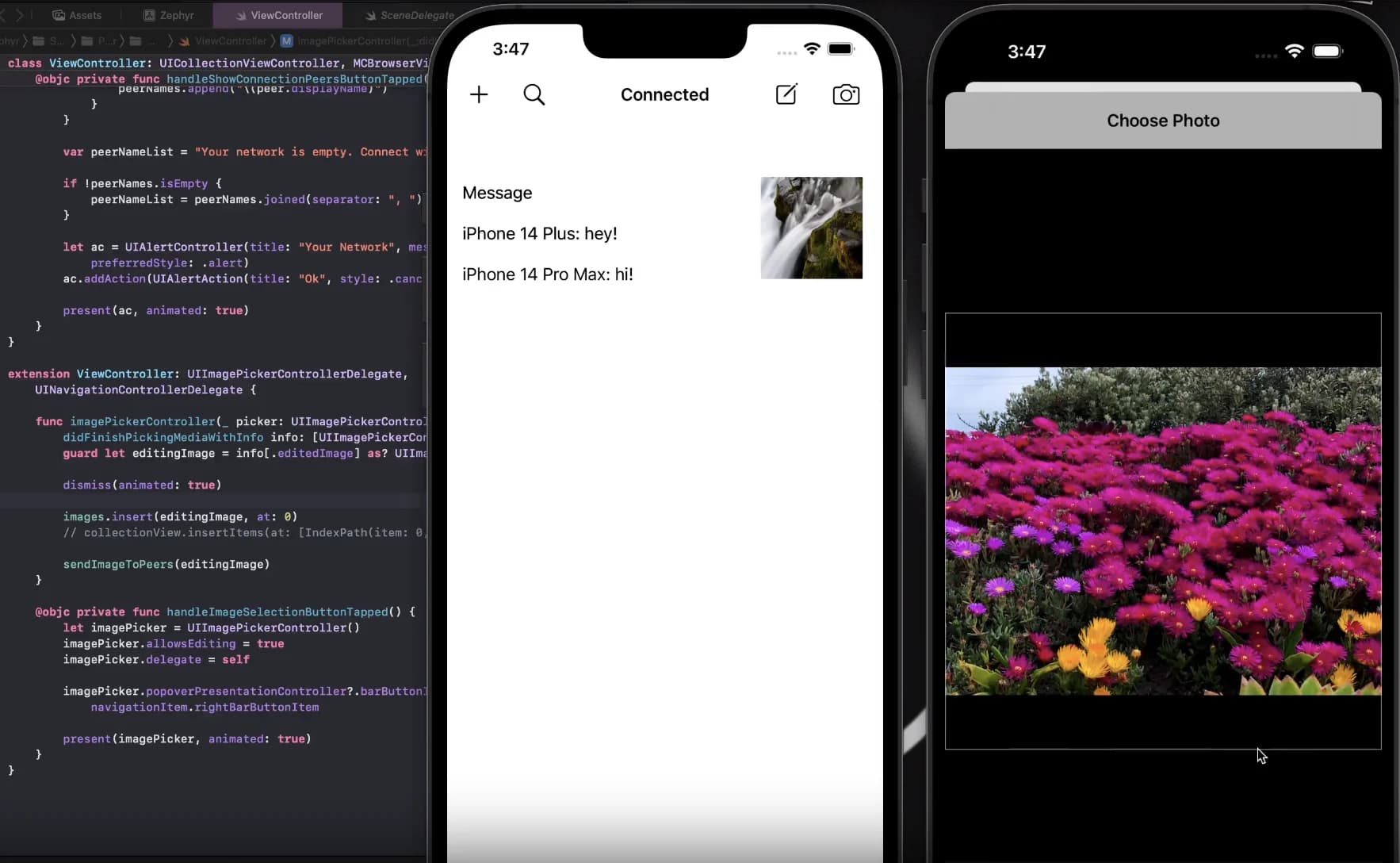

Offline Mesh Network

2022: A mesh network written in Swift using Multipeer Connectivity that transfers files across devices entirely offline through a chain of encrypted nodes.